This is the first post in a three-part series on how DevOps and platform engineering teams can apply the skills and mindsets they already use to cultivate a culture of FinOps awareness that delivers business value in cloud environments.

“Move fast and break things” is one of the most quoted truisms in modern software engineering. DevOps has changed the way engineers work—we ship faster, iterate constantly and recover quickly. In the cloud, speed without controls may not disrupt production, but it can certainly break your budget.

You can roll back code, but you can’t roll back a cloud bill

In cloud environments, FinOps and DevOps must work in sync—not just in parallel—or costs spiral out of control. Since infrastructure can be deployed, changed or deleted at the speed of a commit, cost impacts must be just as visible, testable and fixable as any bug in the production system.

Here is a pattern common to most organizations:

- DevOps teams deploy at speed but miss cost impacts until the invoice hits next month

- FinOps teams analyze spend, but often catch problems too late to influence outcomes

- Leadership wants the best of both worlds—delivery velocity and financial controls—without human gates slowing the process

The simple fix is often overlooked. Put FinOps signals where engineering quality is already enforced: the pipeline. When you treat cloud costs like bugs in code (visible, testable and fixable), you eliminate surprises while delivering maximum business value.

When speed meets separation

DevOps changed the game for software developers and transformed how teams use the cloud. With tools like Terraform, OpenTofu and Pulumi, infrastructure can now be described with precision and consistency. The irony? The same flexibility makes it just as easy to create waste. A single Terraform module can spin up thousands of nodes or provision petabytes of storage without asking for confirmation. Without proper cost controls, the blast radius of a mistake only grows.
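One way to put a number on that blast radius is to inspect the plan before it is applied. The sketch below is a minimal example, assuming Terraform and the JSON plan format produced by `terraform show -json <planfile>`: it counts the resources a change would create, grouped by resource type, and flags any type above a limit. The function names and the default limit of 50 are hypothetical; choose thresholds that fit your environment.

```python
from collections import Counter


def count_creates(plan: dict) -> Counter:
    """Count resources the plan will create, grouped by resource type."""
    creates = Counter()
    for rc in plan.get("resource_changes", []):
        # Replacements appear as ["delete", "create"] or ["create", "delete"],
        # so test membership rather than equality.
        if "create" in rc["change"]["actions"]:
            creates[rc["type"]] += 1
    return creates


def blast_radius_findings(plan: dict, max_creates_per_type: int = 50) -> list[str]:
    """Return one message per resource type that exceeds the (hypothetical) limit."""
    return [
        f"{rtype}: plan creates {n} resources (limit {max_creates_per_type})"
        for rtype, n in sorted(count_creates(plan).items())
        if n > max_creates_per_type
    ]
```

In a pipeline, you might run `terraform plan -out=tfplan`, then `terraform show -json tfplan > plan.json`, load that file with `json.load` and pass the result to `blast_radius_findings`. Because resources declared with `count` or `for_each` appear once per instance in `resource_changes`, a module that fans out to thousands of nodes shows up clearly in the counts.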

The $5k wake-up call

A few years ago, my team deployed a complex feature release “the old way”: a single, large, bundled release late on a weeknight, using code handed to us by the development team. Everything initially tested clean. But we missed one detail: our development environment’s telemetry was still active. By morning, production was down, and we spent hours resolving a severity-one incident, rolling back the release to identify the root cause.

Then came the kicker: a $5,000 hit from unexpected infrastructure and storage costs. Our pipeline did everything it was built to do; it just never considered cost.

That incident was our team’s FinOps wake-up call. It became apparent that, just as we test new code changes before merging them into a branch, we needed a way to validate cost impacts before deployment. We wouldn’t ship untested code, so why would we deploy uncosted infrastructure?

We made two key changes to our approach:

- Smaller, more frequent releases: Smaller changes are easier to track and test, produce clearer signals and make any rollback less disruptive.

- New cost guardrails: We now build cost guardrails into our pipeline. These automated controls aren’t blockers; they are sanity checks that prevent runaway spending.
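A guardrail that acts as a sanity check rather than a blocker can be expressed as two thresholds: a soft one that only warns and a hard ceiling that fails the build. The sketch below assumes you already get a baseline and a proposed monthly cost estimate from whatever estimation tool you use; the `CostGuardrail` class, the 10% warning level and the $5,000 ceiling are all hypothetical values for illustration.

```python
from dataclasses import dataclass


@dataclass
class CostGuardrail:
    """Hypothetical thresholds; tune them to your own budgets."""
    warn_pct: float = 10.0      # warn when monthly cost grows by more than 10%
    block_usd: float = 5_000.0  # fail only when the increase exceeds $5,000/month


def evaluate(baseline_usd: float, proposed_usd: float, rail: CostGuardrail) -> tuple[str, str]:
    """Return (status, message), where status is 'pass', 'warn' or 'fail'."""
    delta = proposed_usd - baseline_usd
    if baseline_usd > 0:
        pct = delta * 100 / baseline_usd
    else:
        # New spend with no baseline is always worth a warning.
        pct = float("inf") if delta > 0 else 0.0

    if delta > rail.block_usd:
        return "fail", f"Estimated increase of ${delta:,.0f}/month exceeds the ${rail.block_usd:,.0f} ceiling"
    if pct > rail.warn_pct:
        return "warn", f"Estimated cost rises {pct:.0f}% (${delta:,.0f}/month); review before merging"
    return "pass", f"Estimated change of ${delta:,.0f}/month is within guardrails"


def exit_code(status: str) -> int:
    """Only a hard-ceiling breach stops the pipeline; warnings let it continue."""
    return 1 if status == "fail" else 0
```

Keeping the warning and failure levels separate is the design choice that keeps the check from becoming a human gate: most changes pass or warn, and only a genuinely runaway change stops the merge.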

The result? We achieved a 75% reduction in unexpected cloud costs, reduced our time to cost detection from weeks to minutes, and improved code quality. This enabled our team to spend more time solving real problems and less time chasing invoices.

Introducing the FinOps as Code framework

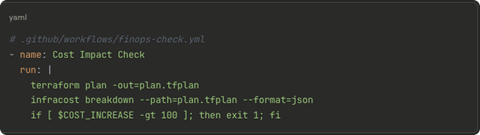

The FinOps as Code (FAC) framework isn’t about perfect cost optimization. It’s about acting on cost signals and applying intelligent controls that show how engineering decisions drive business value. The framework consists of four key concepts that can be integrated into an existing software development life cycle (SDLC): signals, guardrails, automation and attribution.

The mantra of the FAC framework should be:

Treat cost signals like unit tests in code—run them before merging

In practice, each concept maps to a concrete goal in your SDLC:

- Signals: Catch cost spikes before they hit production.

- Guardrails: Prevent expensive mistakes automatically.

- Automation: No manual approvals, just smart defaults.

- Attribution: Every dollar must trace back to a team and a feature.
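To make the “cost signals as unit tests” mantra concrete, here is a minimal sketch using Python’s standard `unittest` module. Everything in it is hypothetical: the per-instance prices, the `PLANNED` resource list, the $500 budget and the `team`/`feature` tag names stand in for data a real pipeline would pull from a pricing source and from the infrastructure plan. It covers two of the concepts above: a signal (estimated cost stays within budget) and attribution (every resource carries an owner).

```python
import unittest

# Hypothetical monthly prices (USD), for illustration only; a real pipeline
# would pull these from a pricing API or a cost estimation tool.
PRICE_PER_MONTH = {"t3.medium": 30.0, "m5.xlarge": 140.0}

# A simplified, hypothetical view of the resources a change will create.
PLANNED = [
    {"name": "api", "instance_type": "t3.medium", "count": 4,
     "tags": {"team": "payments", "feature": "checkout"}},
    {"name": "worker", "instance_type": "m5.xlarge", "count": 2,
     "tags": {"team": "payments", "feature": "checkout"}},
]

MONTHLY_BUDGET_USD = 500.0  # hypothetical budget for this service
REQUIRED_OWNER_TAGS = {"team", "feature"}


def estimated_monthly_cost(resources) -> float:
    """Sum price x count for every planned resource."""
    return sum(PRICE_PER_MONTH[r["instance_type"]] * r["count"] for r in resources)


class CostSignals(unittest.TestCase):
    def test_within_budget(self):
        # Signal: fail the test if the change would push spend past the budget.
        self.assertLessEqual(estimated_monthly_cost(PLANNED), MONTHLY_BUDGET_USD)

    def test_every_resource_is_attributed(self):
        # Attribution: every resource must trace back to a team and a feature.
        for r in PLANNED:
            with self.subTest(resource=r["name"]):
                self.assertTrue(REQUIRED_OWNER_TAGS.issubset(r["tags"]))
```

Saved as, say, `cost_signals.py` (a hypothetical file name), these checks run with `python -m unittest cost_signals` in the same CI step as the rest of the test suite, so a cost regression fails before merge just like a broken function would.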

Just as DevOps automates low-value, high-effort tasks, FinOps as Code removes the manual gates and enforces automated cost controls. Engineers can now focus on building platforms instead of managing cloud commitments or chasing cost anomalies, leading to a more productive and efficient workflow.

Why most FinOps tools miss the mark

Many existing FinOps solutions silo cost optimization and commitment management as a finance problem. But if we want engineers to care about and practice FinOps, we need tools that integrate with how they already work: code, automation and observable systems. The FAC framework offers a more integrated approach to cost optimization, making it more accessible and actionable for engineers.

A few key technologies make this practical:

- When Kubernetes clusters need intelligent scaling: Tools like Flexera’s Container Optimization (formerly Spot Ocean) automatically blend spot (excess capacity), reserved and on-demand infrastructure while keeping workloads running. No manual capacity planning or headroom tweaking is required; just resilient infrastructure that optimizes itself.

- When you need commitment utilization without complexity: Solutions like Flexera Cloud Commitment Management turn RI/SP/CUD management into an API call instead of a procurement headache. Your Infrastructure as Code can optimize for cost in the same way it provisions infrastructure.

- When you need workload-level cost visibility: OpenCost (a CNCF incubating project) provides attribution standards that make incoming signals and pipeline guardrails useful. Now you can finally answer “What does this microservice cost to run?” without a finance degree or a master’s-level class in spreadsheet translation.
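Once per-workload cost data is available, attribution is mostly a grouping problem. The sketch below assumes you have already exported allocation records (for example, from OpenCost) into plain dictionaries; the `labels` and `cost_usd` field names are hypothetical and do not reflect OpenCost’s actual response format. It rolls spend up by team and feature and reports how much of the total is unattributed.

```python
from collections import defaultdict


def attribute(records, keys=("team", "feature")) -> dict[tuple, float]:
    """Sum cost per (team, feature); records missing either label go to 'unattributed'."""
    totals: dict[tuple, float] = defaultdict(float)
    for rec in records:
        labels = rec.get("labels", {})
        if all(k in labels for k in keys):
            owner = tuple(labels[k] for k in keys)
        else:
            owner = ("unattributed",)
        totals[owner] += rec["cost_usd"]
    return dict(totals)


def unattributed_share(totals: dict[tuple, float]) -> float:
    """Fraction of total spend that cannot be traced to a team and feature."""
    total = sum(totals.values())
    return totals.get(("unattributed",), 0.0) / total if total else 0.0
```

Tracking the unattributed share over time is one simple way to measure whether the “every dollar must trace back to a team and a feature” goal is actually being met.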

These aren’t cost tools with automation—they are automation tools that optimize costs. They work how engineers think: declaratively, programmatically and observably.

Start where you are

One question I’m often asked when discussing this concept is “How do we get started?” You don’t need a comprehensive policy library on day one. Pick one or two high-signal checks.

For example, start with:

- Tag validation: Make sure any code shipped meets current tagging policies.

- Scaling limits and spot usage: Cap how far workloads can scale, and require spot capacity where workloads can tolerate interruption.
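Tag validation is a good first check because it needs nothing beyond the plan itself. The sketch below assumes Terraform and the JSON output of `terraform show -json <planfile>`; the required tag set (`team`, `feature`) is a hypothetical policy. It only inspects resources that will be created or updated and that expose a `tags` attribute.

```python
import json

REQUIRED_TAGS = {"team", "feature"}  # hypothetical tagging policy


def load_plan(path: str) -> dict:
    """Load the output of `terraform show -json <planfile>`."""
    with open(path) as f:
        return json.load(f)


def missing_tags(plan: dict, required: set[str] = REQUIRED_TAGS) -> dict[str, set[str]]:
    """Map each resource address to the required tags it lacks."""
    problems = {}
    for rc in plan.get("resource_changes", []):
        actions = set(rc["change"]["actions"])
        after = rc["change"].get("after") or {}
        # Skip deletes, no-ops and resource types that have no tags attribute.
        # Note: AWS provider resources also expose tags_all, which merges
        # provider-level default_tags; check that instead if you rely on them.
        if not ({"create", "update"} & actions) or "tags" not in after:
            continue
        present = set((after.get("tags") or {}).keys())
        lacking = required - present
        if lacking:
            problems[rc["address"]] = lacking
    return problems
```

Printing the result of `missing_tags(load_plan("plan.json"))` in the pipeline log gives engineers the exact resource address and the tags to add, which is usually enough to fix the problem in the same pull request.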

With this approach, we rely on education and warnings, rather than hard blocks, to drive compliance, and we provide workarounds when a check doesn’t fit. Because these gate checks use tools we already run in production, they change how engineers view FinOps: as an active, continuous exercise in managing budget and infrastructure to deliver maximum business value.
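A warn-only check for scaling limits and spot usage might look like the sketch below. It assumes EKS managed node groups defined with the Terraform AWS provider’s `aws_eks_node_group` resource, whose `scaling_config` block appears as a one-element list in plan JSON and whose `capacity_type` is either `ON_DEMAND` or `SPOT`. The 20-node ceiling and the function names are hypothetical, and the check treats an unset `capacity_type` as on-demand.

```python
MAX_NODES = 20  # hypothetical per-node-group ceiling


def scaling_warnings(plan: dict, max_nodes: int = MAX_NODES) -> list[str]:
    """Collect warnings for EKS node groups that scale too far or skip spot capacity."""
    warnings = []
    for rc in plan.get("resource_changes", []):
        if rc["type"] != "aws_eks_node_group":
            continue
        if not {"create", "update"} & set(rc["change"]["actions"]):
            continue
        after = rc["change"].get("after") or {}
        # Nested blocks such as scaling_config are rendered as lists in plan JSON.
        scaling = (after.get("scaling_config") or [{}])[0]
        max_size = scaling.get("max_size")
        if max_size is not None and max_size > max_nodes:
            warnings.append(f"{rc['address']}: max_size {max_size} exceeds {max_nodes}")
        if after.get("capacity_type") != "SPOT":
            warnings.append(f"{rc['address']}: not using spot capacity; confirm the workload needs on-demand")
    return warnings


def report(warnings: list[str]) -> int:
    """Print each finding as a warning and return 0 so the pipeline keeps going."""
    for w in warnings:
        print(f"WARNING: {w}")
    return 0
```

Returning 0 regardless of findings is deliberate: the check educates rather than blocks, and a team can promote it to a failing check later once the warnings have been addressed.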

Make these small changes, measure the results, expand what works and refactor what isn’t hitting the mark.

Stop chasing invoices, start engineering value

Ready to help FinOps, DevOps, engineering and product teams build resilient platforms that can run within cost controls? Enhance your engineering teams’ DevOps and FinOps practices by reaching out to one of our experts today.