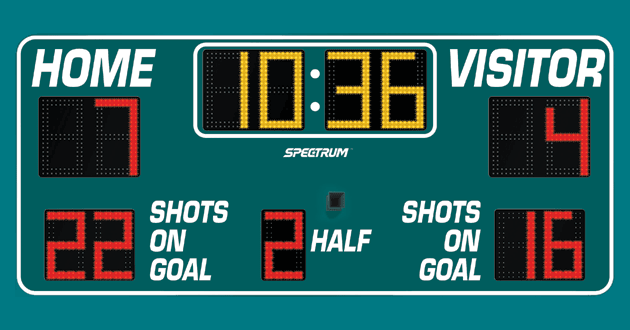

Nobody plays a sport without keeping score. Yet plenty of organizations implement a software asset management and license optimization program without any structured way to measure actual outcomes. This article offers some ideas on how to measure and track the data, operations and outcomes of a program focused on optimizing the value and usage of software in an organization.

Business cases for a software asset management and license optimization program will present broad ideals of what ‘success’ looks like, such as:

- Reduce risk of unplanned payments from using unlicensed software

- Reduce risk of security breaches arising from use of unpatched software

- Save money on unneeded maintenance

- Avoid over-purchasing of software licenses

- Reduce the time and effort required to fulfill requests for new software

Crossing the gap from high-level objectives like these to identifying how to actually measure outcomes can take some thought. The key principle is to work out what is meaningful and important, and then find ways to measure those things. [1]

Best-in-class organizations have a robust approach to monitoring and improving a range of metrics. Metrics will often be used as the basis for key performance indicators (KPIs) for teams who have responsibility for managing and influencing those metrics.

With that in mind, some sample metrics that may be useful to help with software license optimization are listed below. The metrics have been broken down into three areas:

- Input data metrics

- Operational metrics

- Financial metrics

Input data metrics

A software license optimization program relies heavily on comprehensive and accurate input data about the IT hardware and software assets within the organization. This data is best held in a central Asset Management Database (AMDB) that consolidates data gathered from multiple sources. Input data metrics are useful for assessing the trustworthiness of this asset data: they highlight areas where data may not be clean and help identify data gaps for remediation.

Examples are:

- % of active devices for which current hardware and software inventory is available.

- % of devices found on the network which are not recognized as known assets.

- % of devices found active on the network which have a status indicating they should not be active (for example, they are classified as being in storage or retired).

- % of devices found on the network with a bad (blank, duplicate or blacklisted) serial number.

- % of all IT assets in the organization that are represented in the AMDB.

- Number of IT asset purchases that are not yet represented in the AMDB.

- % of assets with no recorded location, business unit, etc.

- % of computer records with missing processor information (auto-discovery tools which gather inventory do not always reliably collect this data).

- Number of active individual-use assets with no assigned user.

- Number of assets which are assigned to people who have left the organization.

- % of virtual machines for which physical host information is not known.
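
As a rough illustration, several of the input data metrics above can be computed from a merged list of device records. The sketch below is written in Python and assumes a hypothetical `Device` record combining network discovery and AMDB data; the field names, status values and serial number blocklist are assumptions for illustration, not the schema of any particular tool.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical blocklist of placeholder serial numbers; tune to what your tools report.
BLOCKLISTED_SERIALS = {"0", "To be filled by O.E.M.", "Default string"}
# Assumed status values meaning a device should not be seen on the network.
INACTIVE_STATUSES = {"in storage", "retired"}


@dataclass
class Device:
    """Hypothetical merged AMDB/discovery record; real schemas will differ."""
    serial: Optional[str]
    seen_on_network: bool  # discovered as active by a network scan
    known_asset: bool      # matched to an existing AMDB asset record
    status: str            # lifecycle status recorded in the AMDB
    has_inventory: bool    # current hardware and software inventory available


def pct(part: int, whole: int) -> float:
    """Percentage of `whole`, returning 0.0 when the population is empty."""
    return 100.0 * part / whole if whole else 0.0


def input_data_metrics(devices: list[Device]) -> dict[str, float]:
    # All four metrics use devices seen active on the network as the denominator.
    active = [d for d in devices if d.seen_on_network]
    serials = [(d.serial or "").strip() for d in active]
    counts = Counter(s for s in serials if s)

    def bad_serial(s: str) -> bool:
        # Blank, duplicated across active devices, or on the blocklist.
        return not s or counts[s] > 1 or s in BLOCKLISTED_SERIALS

    n = len(active)
    return {
        "pct_active_with_inventory": pct(sum(d.has_inventory for d in active), n),
        "pct_not_known_assets": pct(sum(not d.known_asset for d in active), n),
        "pct_active_but_inactive_status": pct(
            sum(d.status in INACTIVE_STATUSES for d in active), n),
        "pct_bad_serial": pct(sum(bad_serial(s) for s in serials), n),
    }
```

Using active devices as the denominator keeps these metrics focused on what is actually running; a different denominator (for example, all AMDB records) would answer a different question.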

Operational metrics

Operational metrics help to measure the operational performance of software license optimization activities. Examples are:

- % of application installations that have been mapped to normalized application details.

- Number of installations of commercial applications without a license.

- % of application installations which have not been used within the last (say) 90 days.

- Average number of distinct versions deployed per application.

- % of assets which are not actively deployed (for example, hardware assets on the shelf, or software licenses not used).

- % of installations which are not using the latest patch level available from the software publisher.

- Number of installations of applications with known security vulnerabilities.

- Number of installations of applications which are unauthorized (prohibited) for use.

- % of installations of applications that have reached the end of their support life.

- Average time taken to fulfill an end user’s request for new software.
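
Similarly, a few of the operational metrics can be sketched over normalized installation records. This minimal Python example assumes a hypothetical `Installation` record that already carries the normalized application name, version, license status and last-used date; the 90-day threshold mirrors the example above and is a parameter, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Installation:
    """Hypothetical normalized installation record (application already mapped)."""
    application: str
    version: str
    commercial: bool
    licensed: bool
    last_used: Optional[date]  # None if usage was never observed


def pct(part: int, whole: int) -> float:
    """Percentage of `whole`, returning 0.0 when the population is empty."""
    return 100.0 * part / whole if whole else 0.0


def operational_metrics(installs: list[Installation], today: date,
                        unused_days: int = 90) -> dict[str, float]:
    cutoff = today - timedelta(days=unused_days)
    # Treat never-used installations as unused; adjust if usage data has gaps.
    unused = sum(1 for i in installs if i.last_used is None or i.last_used < cutoff)

    # Collect the distinct versions seen for each application.
    versions: dict[str, set[str]] = {}
    for i in installs:
        versions.setdefault(i.application, set()).add(i.version)

    return {
        "unlicensed_commercial_installs": float(
            sum(1 for i in installs if i.commercial and not i.licensed)),
        "pct_unused": pct(unused, len(installs)),
        "avg_versions_per_app": float(mean(len(v) for v in versions.values()))
        if versions else 0.0,
    }
```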

Financial metrics

Financial metrics are useful for measuring the outcomes from a software asset management and license optimization program in terms of the value or cost to the organization. Examples are:

- Value of software installations proactively removed.

- Value of maintenance not renewed.

- Value of software requests fulfilled from existing software license entitlements (i.e. without having to purchase new licenses).

- Value of unlicensed deployments identified and remediated.

- % of software licenses under maintenance which are currently used.

- Value of contingency held on the company’s balance sheet or allocated in budgets for unplanned software license liabilities (for example, software audit true-up fees).

- Number of software vendor audit notifications received per year.

- Labor costs associated with responding to software license audits (and/or average cost per audit).

- Costs paid arising from software license audit findings per year.

- Number of security incidents arising from software vulnerabilities per quarter.

- % of license and maintenance costs that are charged back to other parts of the business.

- Ratio of the value delivered to the organization by the software license optimization (SLO) program to the number of full-time equivalent (FTE) staff supporting it.
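
Some financial metrics reduce to simple arithmetic once the underlying figures are available. The Python sketch below assumes a hypothetical per-product `ProductPosition` record, and treats the program's value as the sum of whichever financial outcomes you choose to track; both are assumptions made for illustration rather than a standard definition.

```python
from dataclasses import dataclass


@dataclass
class ProductPosition:
    """Hypothetical per-product license position."""
    product: str
    licenses_under_maintenance: int
    licenses_in_use: int


def pct_maintained_licenses_used(positions: list[ProductPosition]) -> float:
    """% of licenses under maintenance that are currently used."""
    maintained = sum(p.licenses_under_maintenance for p in positions)
    # Cap usage at the maintained count so over-deployment of one product
    # cannot mask unused maintained licenses on another.
    used = sum(min(p.licenses_in_use, p.licenses_under_maintenance) for p in positions)
    return 100.0 * used / maintained if maintained else 0.0


def value_per_fte(value_components: dict[str, float], fte: float) -> float:
    """Program value per FTE, assuming value is the sum of the tracked outcomes."""
    if fte <= 0:
        raise ValueError("FTE count must be positive")
    return sum(value_components.values()) / fte
```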

What are your favorite SAM metrics? How do you go about measuring them?

[1] Of course, some meaningful things in life are very hard to measure. However, don’t snatch defeat from the jaws of victory by letting that stop you from measuring what can be measured.