This article is the second in a series describing how the development team at RightScale does continuous integration (CI) and continuous delivery (CD) in the cloud. The first article provided an overview of CI/CD in the cloud and described the key features of the RightScale platform that we leverage.

In this article, I will describe how the RightScale development organization uses Jenkins, various test tools, and the RightScale platform itself to automate testing of RightScale ServerTemplates across a large matrix of clouds and operating systems.

The Problem: A Test Matrix from Hell

As part of our solution, RightScale develops and delivers pre-configured ServerTemplates for popular technologies and stacks, such as LAMP, MySQL, and more, for both Linux and Windows. These ServerTemplates are designed to operate across a broad range of clouds (AWS, Azure, Google, Rackspace, CloudStack, OpenStack, VMware vSphere, and more) as well as a variety of operating systems such as CentOS, Ubuntu, RHEL, and Windows.

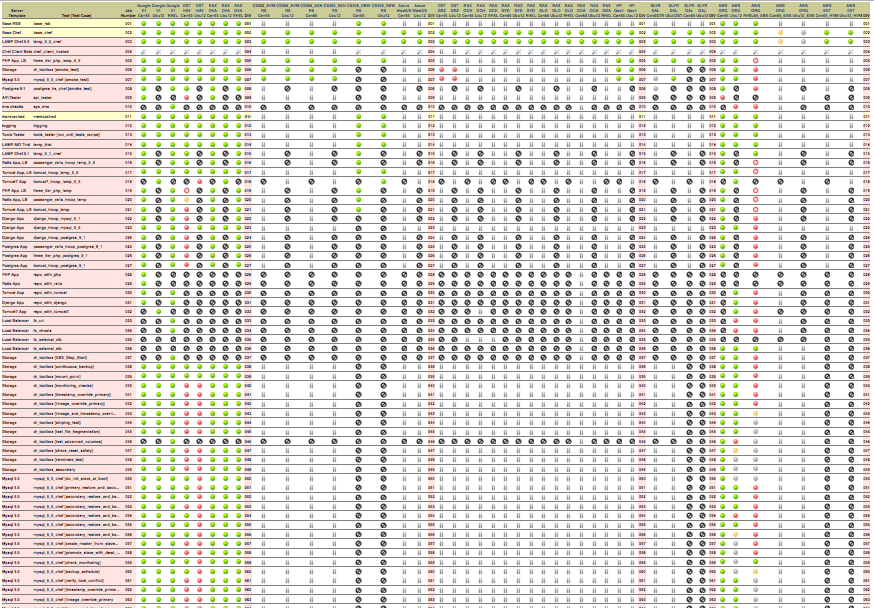

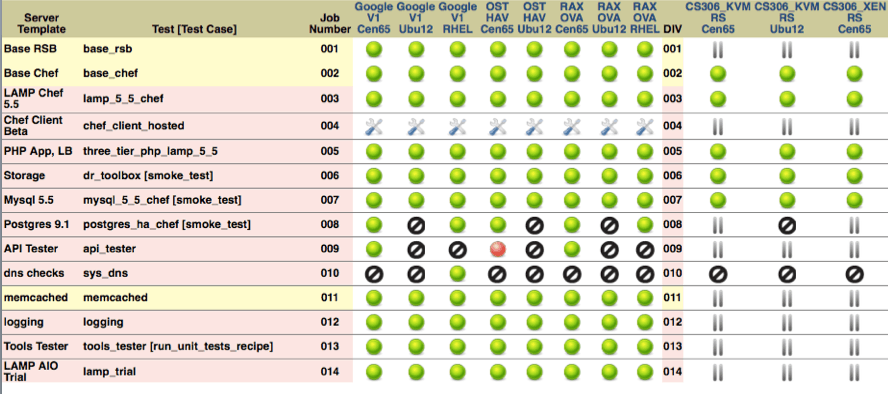

Since RightScale provides ongoing technical support and bug fixes for these ServerTemplates, it's critical that we test them across all of the platform/OS combinations we support. As the number of ServerTemplates, tests, and clouds grew, the result was a test matrix that had as many as 2,500 possible combinations.

Although we were using Jenkins as a front end to our homegrown regression testing tool, VirtualMonkey, the amount of time and manual effort to set up the test matrix (which was originally a GoogleDoc), execute the tests, and review the results quickly became unmanageable. In addition, each cell in the above matrix represented a test that might take 20 minutes to 2 hours. We needed to balance running tests in parallel to reduce the elapsed time with serializing tests in order to manage the cloud capacity and budget required.
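To make the trade-off concrete, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that all 2,500 cells are run, that every test takes the same time, and that tests run in fixed-size waves; the function name `elapsed_hours` and the concurrency levels are hypothetical and are not taken from our tooling.

```python
import math


def elapsed_hours(num_tests, minutes_per_test, concurrency):
    """Idealized wall-clock time when tests run in waves of `concurrency`.

    Assumption: every test takes the same time and waves never overlap.
    """
    waves = math.ceil(num_tests / concurrency)
    return waves * minutes_per_test / 60


# Compare fully serial execution with two illustrative parallelism levels,
# using the 20-minute and 2-hour bounds quoted for a single cell.
for concurrency in (1, 10, 50):
    best = elapsed_hours(2500, 20, concurrency)
    worst = elapsed_hours(2500, 120, concurrency)
    print(f"{concurrency:>3} at a time: {best:,.0f} to {worst:,.0f} hours")
```

Under these assumptions, a fully serial run would take somewhere between about 833 and 5,000 hours, while 50 concurrent tests would bring that down to roughly 17 to 100 hours at the cost of 50 deployments running at once.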

Our Solution: RocketMonkey, Jenkins, and RightScale

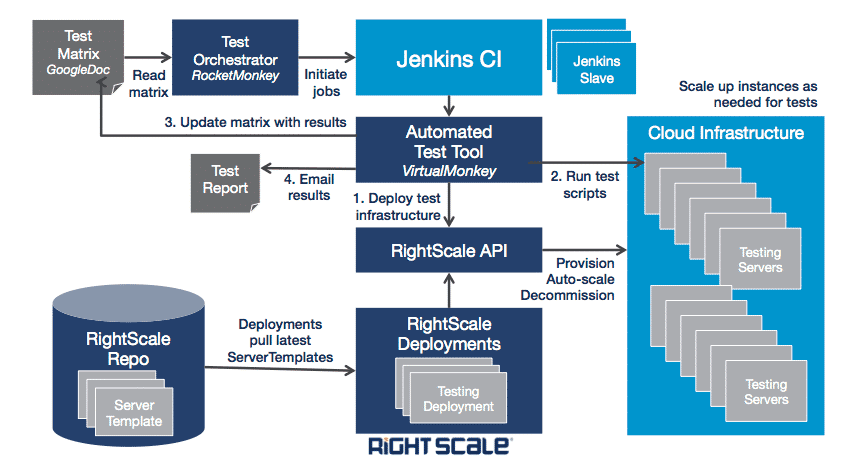

To solve this problem, we developed a new tool, RocketMonkey, that reads a test matrix from a GoogleDoc and translates each cell into individual jobs loaded into Jenkins. RocketMonkey then drives these jobs via the Jenkins API. RocketMonkey also allows us to define what tests run in parallel and how tests are sequenced.
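The cell-to-job translation can be pictured with a minimal Python sketch. This is not RocketMonkey's actual code: the matrix layout (ServerTemplates as rows, clouds as columns, an OS name in each cell), the `JobSpec` type, and the job naming scheme are all assumptions made for illustration, and the step that loads the jobs into Jenkins is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobSpec:
    name: str      # hypothetical Jenkins job name
    template: str  # ServerTemplate under test (matrix row)
    cloud: str     # target cloud (matrix column)
    image: str     # operating system named in the cell


def matrix_to_jobs(header, rows):
    """Return one JobSpec per non-empty cell of a spreadsheet-like matrix."""
    clouds = header[1:]  # first column holds the ServerTemplate name
    jobs = []
    for row in rows:
        template, cells = row[0], row[1:]
        for cloud, cell in zip(clouds, cells):
            image = cell.strip()
            if not image:  # assumption: an empty cell means "not tested"
                continue
            name = "_".join(p.replace(" ", "-") for p in (template, cloud, image))
            jobs.append(JobSpec(name, template, cloud, image))
    return jobs


header = ["ServerTemplate", "AWS", "Azure", "Google"]
rows = [
    ["LAMP", "CentOS", "Ubuntu", ""],
    ["MySQL", "Ubuntu", "", "RHEL"],
]
for job in matrix_to_jobs(header, rows):
    print(job.name)
```

Keeping the matrix as the single source of truth means that adding a cloud or a ServerTemplate is a spreadsheet edit rather than a round of manual job configuration.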

After the jobs are configured, the test activity is coordinated by RocketMonkey, which selectively triggers jobs in Jenkins, which in turn uses VirtualMonkey to execute the test scripts. The test scripts call the RightScale API to provision, auto-scale, and deprovision cloud deployments as required for each test. These cloud deployments are defined within RightScale and pull the latest versions of the ServerTemplates that are under test.

RocketMonkey provides control of the test execution at a high level, allowing us to address several issues that are a direct result of large-scale testing. It can be configured to link columns or rows in the test matrix, so that we can run all tests on a specific cloud, or the same test across all clouds, at will. Tests can be linked to run serially, so that they consume a limited amount of cloud resources while running unattended overnight, or in parallel to shorten the elapsed time.
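One way to think about linking is as grouping cells along one axis of the matrix and then deciding whether each group runs as a chain. The Python sketch below is a simplification under stated assumptions: the functions `group_jobs` and `plan` are hypothetical, and scheduling is modeled as fixed "waves" rather than as each job triggering its successor on completion.

```python
from collections import defaultdict


def group_jobs(cells, by):
    """Group (template, cloud) cells by row ("template") or column ("cloud")."""
    index = {"template": 0, "cloud": 1}[by]
    groups = defaultdict(list)
    for cell in cells:
        groups[cell[index]].append(cell)
    return dict(groups)


def plan(cells, by, serial):
    """Return a list of waves; jobs in the same wave may run concurrently.

    serial=True:  each group is a chain, so a wave holds at most one job
                  per group (for example, one deployment per cloud).
    serial=False: every job is placed in a single wave.
    """
    if not serial:
        return [list(cells)]
    chains = list(group_jobs(cells, by).values())
    longest = max((len(chain) for chain in chains), default=0)
    return [[c[i] for c in chains if i < len(c)] for i in range(longest)]


cells = [("LAMP", "AWS"), ("LAMP", "Azure"), ("MySQL", "AWS"), ("MySQL", "Google")]
for wave in plan(cells, by="cloud", serial=True):
    print(wave)
```

With `by="cloud"` and `serial=True`, the two AWS jobs land in different waves, so no cloud ever hosts more than one test deployment at a time; switching to `serial=False` trades that capacity limit for the shortest elapsed time.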

As tests complete, a generated HTML report based on the original GoogleDoc is automatically updated with a status icon so that the QA team can easily see how the tests are progressing. The automation can also be set to auto-halt testing if repeated failures are detected, for example, if there are network problems. Finally, one of the biggest time savings is in the automatic management of the result logs, which are available via links on the individual green/red dots.
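The auto-halt behavior described above can be sketched as a simple consecutive-failure counter. This is an illustrative Python sketch, not the actual implementation: the class name `FailureBreaker`, the threshold of three, and the rule that any pass resets the streak are all assumptions.

```python
class FailureBreaker:
    """Signals a halt after a run of consecutive failures (hypothetical)."""

    def __init__(self, max_consecutive_failures=3):
        self.limit = max_consecutive_failures
        self.streak = 0

    def record(self, passed):
        """Record one test result; return True if testing should halt."""
        # Assumption: a single passing test resets the failure streak.
        self.streak = 0 if passed else self.streak + 1
        return self.streak >= self.limit


def run_until_halt(results, breaker):
    """Consume results in order; return how many were processed."""
    processed = 0
    for passed in results:
        processed += 1
        if breaker.record(passed):
            break  # stop before burning more cloud capacity
    return processed


results = [True, False, True, False, False, False, True]
print(run_until_halt(results, FailureBreaker(3)))  # prints 6
```

Isolated failures are tolerated because each pass resets the streak; only a sustained run of failures, which is what an environmental problem such as a network outage tends to produce, stops the remaining tests.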

Bottom Line: Reduce Manual Effort, Speed Development Cycles

By integrating RightScale into our CI and testing processes, we are able to automate all aspects of continuous testing, from the execution of tests to the provisioning of the infrastructure needed to support them. As a result, we've been able to greatly reduce the manual effort involved in testing. It is difficult to estimate the efficiency gain in numbers, but it is safe to say that it exceeds 10x. Rather than consuming an entire team of 10 people for 4 weeks, we were able to run regression in 3 weeks with only 2 people dedicated to seeing all the tests through. The rest of the team was then free to respond to fixes or work on planning the next sprint.