One of the key benefits of cloud computing is that it gives you the ability to launch resources as needed to meet application demands. Some organizations that run their own private clouds are interested in leveraging cloudbursting, traditionally defined as an architecture where application workloads run fully in a private cloud until they grow beyond some threshold, after which the application tier bursts into a public cloud to take advantage of additional compute resources. While this application-tier cloudbursting sounds simple in concept, you need to be aware of several important technical and business considerations if you want to leverage this architecture. These include bridging the inconsistencies among multiple clouds as well as dealing with the security, latency, and cost issues relating to communicating between public and private clouds. While there are approaches to addressing these technical challenges, many organizations choose alternatives to the traditional view of cloudbursting that focus on deploying workloads to the right cloud at the level of an application portfolio or an application service.
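The traditional, threshold-based definition above can be sketched in a few lines. This is only an illustration of the idea, not how any particular platform implements it. The names `Placement` and `plan_burst` are hypothetical, and capacity is simplified to a count of identical application servers.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    private_servers: int
    public_servers: int


def plan_burst(required_servers: int, private_capacity: int) -> Placement:
    """Fill the private cloud first; only the overflow goes to the public cloud."""
    if required_servers < 0 or private_capacity < 0:
        raise ValueError("server counts must be non-negative")
    private = min(required_servers, private_capacity)
    return Placement(private_servers=private,
                     public_servers=required_servers - private)
```

With a private capacity of 10 servers, a demand of 8 stays entirely private, while a demand of 14 places 10 servers privately and bursts 4 into the public cloud.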

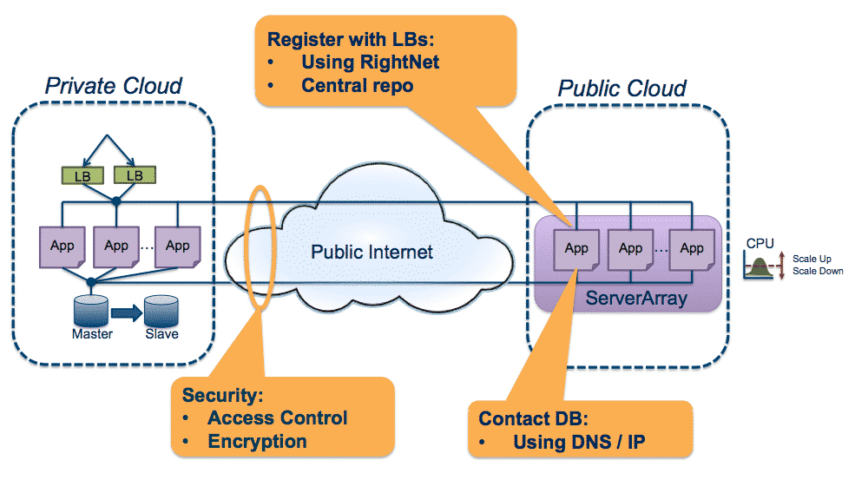

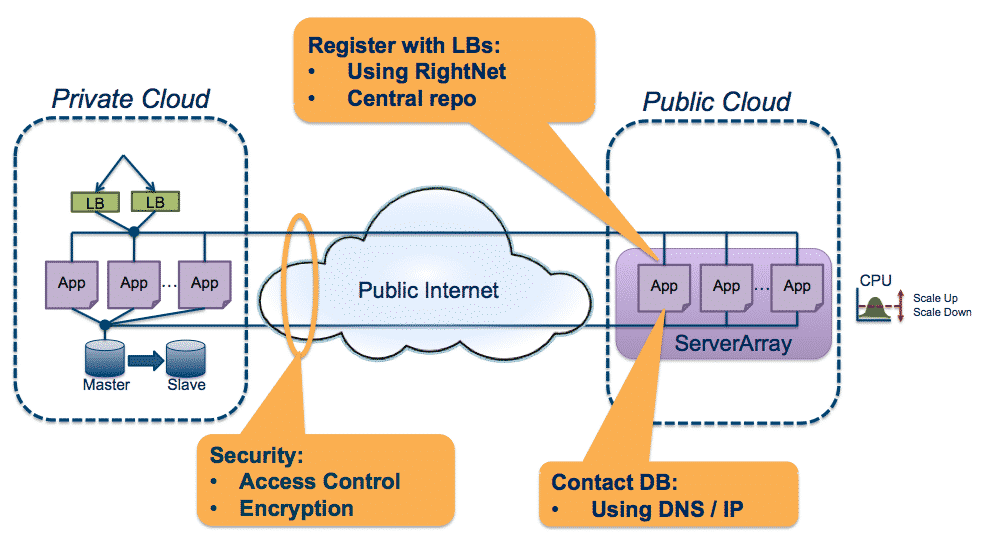

Example of a Cloudbursting Architecture

Creating a cloudbursting architecture for an application involves ensuring that the communication latency and bandwidth between the two clouds do not negatively affect the application or user experience. It also means provisioning application servers that work identically in both clouds, setting up a communication path (typically over the public Internet), putting controls in place to allow resources to access each other, and securing the communication channels. You also need management tools that cover the varying cloud environments, and once all of that is in place, you must periodically test the bursting. The main challenges with cloudbursting are:

- Creating and managing configurations for multiple different clouds.

- Creating and managing a low-latency communication path between the clouds.

- Securing communications between the clouds.

Let’s look at each in detail.

Challenge 1: Managing Configurations

Cloudbursting within an application involves running the same types of resources – application servers in our example – in multiple clouds. However, there can be large variations between the cloud providers you choose, requiring you to configure and manage your applications and their technology stacks across two different environments. Some of the differences include:

- Differing underlying hypervisors and versions of hypervisors

- Different hypervisor features and machine image formats

- Cloud APIs that differ in behavior as well as in the available calls

- Different ranges of virtual machine types and compute power

- Differing network configurations. Some clouds offer the concept of availability zones, others do not. Some have security groups, some have subnets and ACLs, and some have both.

- Varying types of storage, such as object, block, persistent, and ephemeral, which may behave differently from cloud to cloud or may not be available in every cloud.

This also creates downstream effects. For example, using different hypervisors and storage subsystems generally requires using different base virtual machine images that need to be built for each cloud and maintained with every operating system and security patch that comes out.
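To make the per-cloud bookkeeping concrete, here is a minimal sketch of keeping one record of settings per cloud and checking for capability gaps before launching anything. The cloud names, image IDs, instance types, and the `CloudSettings` and `resolve` helpers are all hypothetical and do not correspond to any real provider or to RightScale's own tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudSettings:
    image_id: str        # base image built and patched separately for this cloud
    instance_type: str   # closest available VM size in this cloud
    block_storage: bool  # whether persistent block storage is offered


# Hypothetical clouds and values, for illustration only.
CLOUDS = {
    "private-cloud": CloudSettings("img-private-001", "medium", True),
    "public-cloud": CloudSettings("img-public-001", "m.large", False),
}


def resolve(cloud: str, needs_block_storage: bool) -> CloudSettings:
    """Return the settings for one cloud, failing early on a capability gap."""
    try:
        settings = CLOUDS[cloud]
    except KeyError:
        raise ValueError(f"no configuration defined for cloud {cloud!r}") from None
    if needs_block_storage and not settings.block_storage:
        raise ValueError(f"{cloud!r} does not offer block storage")
    return settings
```

Every entry in the table is something that has to be rebuilt or re-verified with each operating system and security patch, which is why the number of clouds multiplies the maintenance effort.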

RightScale helps to address a lot of these (sometimes subtle) differences by abstracting the variations among clouds for you when you use our multi-cloud management platform and our ServerTemplates™, making it easier to implement hybrid cloud architectures. RightScale can launch servers that function almost identically with predictable configurations in different clouds, even if those clouds are based on incompatible technologies and inconsistent behaviors, thus providing portability across clouds. And RightScale partners with Rackspace, which provides hybrid cloud solutions that use OpenStack technologies for both the public and private cloud components.

Challenge 2: Communication Latency

In the cloudbursting example above, the application tier is located in the public cloud and processes data that it reads from an on-premises database. This works reasonably well because the data is read only to generate thousands of static pages, so the database access is not in any critical latency path.

However, latency and throughput can become a challenge if your application needs to move a significant amount of data between your application tier and database when they are located in different clouds. This latency issue is especially pronounced when the communication is over the public Internet. Because app-to-database communication can involve many round trips per front-end request, even relatively small latencies can add up rapidly.
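A quick back-of-the-envelope calculation shows how round trips compound. The sketch below assumes the round trips happen one after another rather than in parallel, and the round-trip counts and times are hypothetical figures chosen only for illustration.

```python
def added_latency_ms(round_trips: int, rtt_ms: float) -> float:
    """Time one front-end request spends waiting on sequential database round trips."""
    if round_trips < 0 or rtt_ms < 0:
        raise ValueError("inputs must be non-negative")
    return round_trips * rtt_ms


# Hypothetical figures: 50 database round trips per front-end request.
same_site = added_latency_ms(50, 0.5)      # 25.0 ms inside one data center
across_clouds = added_latency_ms(50, 20.0)  # 1000.0 ms over a 20 ms link
```

Under these assumptions, a request that costs 25 ms of database waiting inside one data center costs a full second when the same 50 round trips cross a 20 ms link.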

There are several approaches to reducing this latency. For instance, you can use:

- Cloud providers that host your private cloud colocated with their public cloud, such as Rackspace, SoftLayer, and Logicworks.

- Data centers from companies such as CoreSite and Equinix that offer the ability to connect dedicated private resources using very high-speed cross-connects to multi-tenant public cloud resources.

For many applications, the frequency and volume of application-to-database connections are not optimized, so they require more bandwidth than necessary. Provisioning the communication path can be surprisingly expensive, whether it runs over the public Internet or over a form of leased line. In addition, some cloud providers charge for data egress or ingress. Optimizing this segment of the communication stream therefore reduces latency and lowers the cost of the bandwidth needed to meet your performance goals.

Challenge 3: Handling Security

Finally comes the issue of securing the communication path between the clouds, which means setting up encrypted channels and dealing with the inevitable routing issues, as well as compliance and audit requirements. Depending on application availability requirements, these communication channels may need to be redundant at every level, which increases the routing complexity and adds a cost multiplier to equipment and provisioned pipe costs. Dynamic server allocation creates additional complexities, as new servers added to the array must dynamically join your VPN.

There are several approaches to creating a secure communications channel. RightScale partners with a number of companies that have solutions to address these issues, and cloud providers increasingly offer solutions as well. As an example, AWS offers VPN gateways to its VPCs (Virtual Private Clouds) to handle encryption and some of the routing issues. With new features in OpenStack, it is likely that OpenStack-based providers such as Rackspace will have solutions for this soon. Windows Azure also offers secure site-to-site communication options. In addition, VNS3 from CohesiveFT and CloudOpt’s WAN Optimizer can also be part of the solution.

Cloudbursting Alternatives

Many customers take alternative, and potentially simpler, approaches to defining hybrid cloud architectures.

Application Portfolio Approach

The idea here is simple, and many RightScale customers do this: Rather than trying to make an application span multiple clouds, just move entire applications. The key difference in this use case is that all the servers for an application are located in one cloud – either the private cloud or the public cloud – and there is no communication between the clouds, or, more typically, the communication is minimal.

The overall strategy is to identify which applications are a good fit for the public cloud and which are a good fit for the private cloud, and then move applications so that the private cloud keeps an appropriate amount of capacity headroom. The best candidates for the public cloud are external-facing applications that need to scale.

By cloudbursting entire applications, you don’t have multiple configurations to manage, the communication needs are greatly reduced (or even eliminated altogether), and your overall architecture is greatly simplified.
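The portfolio approach can be sketched as a simple selection over whole applications. This is an illustration under stated assumptions, not a description of how customers actually decide: capacity is measured in servers, `public_fit` is a yes/no flag you set yourself, and the largest-first ordering is just one possible policy. The names `App` and `apps_to_move` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    name: str
    servers: int
    public_fit: bool  # e.g. external-facing with a need to scale


def apps_to_move(apps: list[App], private_capacity: int, headroom: float) -> list[str]:
    """Pick whole applications to move to the public cloud until private
    usage is back under the usable limit (capacity minus headroom)."""
    limit = private_capacity * (1.0 - headroom)
    used = sum(a.servers for a in apps)
    moved = []
    # Consider only public-fit apps, largest first, so fewer apps have to move.
    candidates = sorted((a for a in apps if a.public_fit),
                        key=lambda a: a.servers, reverse=True)
    for app in candidates:
        if used <= limit:
            break
        moved.append(app.name)
        used -= app.servers
    return moved
```

For example, with a private capacity of 100 servers, 20% headroom, and three applications using 40, 30, and 20 servers, moving the 40-server public-fit application alone brings private usage from 90 down to 50 servers. No application is ever split across clouds.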

Service-Oriented Approach

As another alternative, an application can be separated into multiple, unique, and loosely coupled services, and each can run in different clouds. This scenario is very similar to the way AWS’s Jeff Barr, who originated the term, describes cloudbursting. Each of these services can be accessed via a protocol that is designed for some latency and optimizes bandwidth, for example through a web API. The services are designed to function autonomously, such that they do not need to share data other than through each service’s API. This greatly simplifies the integration of the various services (or tiers) within the workload, allowing each service to run on the cloud that is most appropriate.
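A service boundary that crosses clouds has to tolerate slow or dropped calls. One common way to do that, shown here as a minimal sketch and not as anything the approaches above prescribe, is to retry transient failures with exponential backoff. The `call_with_retry` helper is hypothetical; `request` stands for any zero-argument callable that performs the API call and raises `TimeoutError` or `ConnectionError` on a transient failure.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(request: Callable[[], T], attempts: int = 3,
                    backoff_s: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call a remote service, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return request()
        except (TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            # Wait backoff_s, then 2x, 4x, ... before trying again.
            sleep(backoff_s * 2 ** attempt)
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the waiting can be replaced in tests. Retrying like this is safe only for calls that can be repeated without side effects, which is one more reason to keep each service's API coarse-grained and self-contained.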

Conclusion

In working with many of our customers, we have found that application-tier cloudbursting requires a high level of technical sophistication and correspondingly higher costs. Depending on your needs, there may be alternate ways to achieve essentially the same benefits.

At the same time, public cloud providers are addressing some of the communication challenges with various flavors of direct connect offerings. These approaches should help reduce the barriers between clouds. Other technology providers who partner with RightScale for security and WAN optimization solutions can also be part of the solution.

If you are contemplating using multiple clouds concurrently, we can help you minimize complexity and avoid headaches. Contact us to speak with one of our cloud solutions experts.